In my essay on the natural causes of

Pacifica’s Coastal Erosion, I reported on how California’s coast has still not

reached an equilibrium with sea levels that rose at the end of the last ice

age. I also suggested the media and a few scientists give the public a false

impression that all natural weather phenomena and coastal erosion have been

worsened by CO2-driven climate change. Pointing to a few leading perpetrators I

wrote, “After centuries of scientific progress, Trenberth and his ilk have

devolved climate science to the pre-Copernican days so that humans are once

again at the center of the universe, and our carbon sins are responsible for

every problem caused by an ever-changing natural world.” Such a strong

statement deserves further elaboration. Although a highly intelligent

scientist, to support his obsessive claims that CO2-caused climate change has

worsened every extreme event, Trenberth has been tragically undermining

the very foundations of scientific inquiry by 1) reversing the proper

null hypothesis, 2) promoting methods that cannot be falsified, 3) promoting fallacious arguments based only on authority, and 4) stifling any debate that

promotes alternative explanations.

Dr. Trenberth, via his well-groomed

media conduits, preaches to the public that every extreme event - flood or

drought, heat wave or snowstorm - is worsened by rising CO2. To fully

appreciate the pitfalls of his “warmer and wetter” meme, you need to look no

further than Trenberth’s pronouncements regarding the devastating Moore, Oklahoma

tornado. Although Trenberth admits, “climate change from human influences is difficult to perceive and detect because

natural weather-related variability is large”, in a Scientific American interview, arguing only from authority, he cavalierly credited CO2 climate change with a “5 to 10 percent effect in terms of the instability and

subsequent rainfall, but it translates into up to a 33 percent effect in terms

of damage.” But in contrast to Trenberth’s “warmer and wetter world”

assertions, there was no warming contribution. Maximum temperatures in Oklahoma

had been cooler since the 1940s.

Clearly Trenberth’s simplistic “warmer and wetter” world assertion

cannot be applied willy-nilly to every region. Climate change is not globally

homogeneous. It is regionally variable, and the global

average temperature is a chimera of that regional variability.

Furthermore his claim of a “wetter world” is a hypothetical argument not

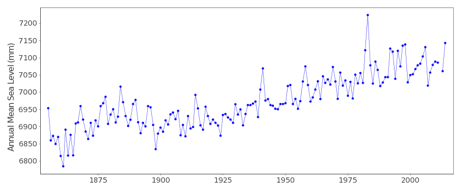

supported by evidence. As seen in the graph below from the peer-reviewed paper Weather And Climate Analyses Using Improved

Global Water Vapor Observations, there is little evidence of a steady increase

in water vapor paralleling rising CO2. Even Trenberth’s

own studies have concluded, “Total Precipitable

Water vapor [TPW] variability for 1988–2001 was dominated by the evolution of

ENSO [El Ninos].” The El Nino effect is evidenced by peak water vapor

coinciding with the 1998 El Nino. Since 1998, the atmosphere has been arguably

drier, contradicting his CO2 driven wetter world hypothesis. Despite a multitude

of contradictions, to garner support for his theories Trenberth

insists on reframing the scientific method by reversing the null hypothesis. Instead

of determining if CO2 had an effect on extreme weather beyond what natural

variability predicts, Trenberth

wants scientists and the public to blindly assume,

“All weather events are affected by climate change because the environment in

which they occur is warmer and moister than it used to be.”

|

| Trend in Total Precipitable Water (TPW) Contradicts Trenbert's Assertions |

In contrast to simply making the “Trenberth

assumptions”, climate scientists use two main strategies to extract any

possible CO2 effect. First, based on physics, the consensus believes early

changes in CO2 concentration exerted no significant climate impact, and extreme

events happening before 1950 were due to natural variability. Thus historical

analyses compare extreme events before and after 1950 to determine how they

differ. But Trenberth

has been maneuvering to make such CO2 attribution studies non-falsifiable by

stripping recent extreme weather events from that historical framework. In the Washington Post, Chris Mooney pushes Trenberth’s “new normal”, quoting,

“All storms, without exception, are different. Even if most of them look just like the ones we used to have, they are not the same.”

Trenberth’s “new normal” sidesteps historical scientific analyses. One would think a good

investigative reporter would question Trenberth’s undermining of that

scientific methodology, but Mooney is not a scientist. Ironically Mooney’s

claim to fame was a book “The Republican War On Science”, about which

Washington Post’s Keay Davidson wrote, “Mooney is like a judge who

interprets a law one way to convict his enemies and another way to acquit his

friends.” Evidently that is just the kind of journalist Trenberth and the Washington Post wanted. Mooney left Mother

Jones and was hired by the Washington Post to write columns on climate change, and he now serves as one of Trenberth’s media

conduits. (Btw: the Real Science website is a great place to view headlines from the past

illustrating great similarities between past and present extreme weather

events.)

The second strategy relies on models that compare “the probability

of an observed weather event in the real world with that of the ‘same’ event in

a hypothetical world without global warming.” But this approach incorrectly

assumes the natural variability is well modeled. Often the

model’s “world without global warming” is assumed to be stationary but with a

lot of “noise”. But that tactic generates false probabilities because our

natural climate is not stationary but oscillating. In 2012 climate experts met

at Oxford University to discuss such attribution studies and the highlights were reported in Nature.

Many experts suggested that due to “the current state of modeling any

attribution would be unreliable, and perhaps impossible…One critic argued that, given the insufficient

observational data and the coarse and mathematically far-from-perfect climate

models used to generate attribution claims, they [attribution claims] are

unjustifiably speculative, basically unverifiable and better not made at all.

And even if event attribution were reliable, another speaker added, the notion

that it is useful for any section of society is unproven.”

(Such concerns raise another

question: if attributing a CO2 effect on any event like a heat wave or drought

is nearly impossible, how reliable is any attribution of a global average temperature

if those same extreme heat waves and droughts skew the global average?)
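The stationarity concern can be made concrete with a toy calculation. The sketch below is purely illustrative and uses made-up numbers: it assumes a trendless “natural” climate made of a sinusoidal multi-decadal oscillation plus Gaussian noise, and compares the chance of exceeding a threshold when the oscillation is lumped into stationary noise against the chance at the warm and cool phases of the cycle. The function names, the 60-year period, the amplitude and the threshold are all assumptions chosen for illustration, not values from any attribution study.

```python
import math

# Toy illustration only: every number below is an assumed, made-up value.
NOISE_SD = 1.0        # year-to-year weather noise (arbitrary units)
OSC_AMPLITUDE = 0.5   # amplitude of a hypothetical natural oscillation
OSC_PERIOD = 60.0     # assumed period in years
THRESHOLD = 2.5       # anomaly that counts as an "extreme event"


def normal_tail(z):
    """Probability that a standard normal variable exceeds z."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def exceedance_stationary(threshold, noise_sd, osc_amplitude):
    """Exceedance probability if the oscillation is treated as extra noise.

    A sinusoid of amplitude A has variance A**2 / 2, so this assumed
    "stationary" model uses zero-mean Gaussian noise with an inflated
    standard deviation.
    """
    total_sd = math.sqrt(noise_sd ** 2 + osc_amplitude ** 2 / 2.0)
    return normal_tail(threshold / total_sd)


def exceedance_at_year(threshold, noise_sd, osc_amplitude, period, year):
    """Exceedance probability when the oscillation's phase is accounted for."""
    offset = osc_amplitude * math.sin(2.0 * math.pi * year / period)
    return normal_tail((threshold - offset) / noise_sd)


P_STATIONARY = exceedance_stationary(THRESHOLD, NOISE_SD, OSC_AMPLITUDE)
P_WARM_PHASE = exceedance_at_year(
    THRESHOLD, NOISE_SD, OSC_AMPLITUDE, OSC_PERIOD, OSC_PERIOD / 4)
P_COOL_PHASE = exceedance_at_year(
    THRESHOLD, NOISE_SD, OSC_AMPLITUDE, OSC_PERIOD, 3 * OSC_PERIOD / 4)

print(f"stationary model: {P_STATIONARY:.4f}")
print(f"warm phase:       {P_WARM_PHASE:.4f}")
print(f"cool phase:       {P_COOL_PHASE:.4f}")
```

In this toy setup the odds of an extreme differ between phases even though nothing in the series has a trend. It says nothing about how any real attribution model represents natural variability.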

Color me an old-fashioned scientist,

but our best practices demand we correctly establish the boundaries of natural

climate change before we can ever assume rising CO2 has worsened weather events.

But Trenberth and his ilk insist on reversing

the null hypothesis. Instead of asking if a weather event exceeded natural

variability, Trenberth insists we rashly assume CO2 has already worsened the

weather. However most scientists share my concern about his maneuverings. As

Professor Myles Allen from Oxford University said, “I

doubt Trenberth’s suggestion [reversing the null hypothesis] will find much

support in the scientific community.”

Trenberth’s attempt to reverse the null hypothesis has been discussed previously

by Dr. Judith Curry and by top-rated skeptic blogs, and in a published paper by Dr. Allen, “In Defense of the Traditional Null Hypothesis.”

Nonetheless many papers are now

being published that simply make Trenberth’s assumptions and there is a growing

rift between researchers who adopt Trenberth’s “new normal” tactics versus “old

school” scientists. The different resulting scientific interpretations are well

illustrated in peer-reviewed publications on droughts and heat waves.

A bank account serves as a good

analogy to illustrate drought stress.

Financial (hydrologic) stress results from changes in income (rain and

snow) versus withdrawals (evaporation and runoff) and the buffering capacity of

your reserves (lakes, wetlands and subsurface water). Old school science would

demand researchers eliminate all confounding factors affecting hydrological

stress before claiming any effect by a single variable like CO2. Here are a few

confounding factors that are seldom addressed in papers that blame a greenhouse

effect for higher temperatures and stronger heat waves and droughts.

i.) Clear dry skies increase

shortwave (solar) insolation, while simultaneously decreasing downward long wave radiation (i.e. decreasing the

greenhouse effect). Reasons for

this were discussed in an essay Natural Heat Waves and have been verified by satellite data (Yin 2014). Higher temperatures happen despite a reduced greenhouse effect.

ii.) In arid and semi-arid regions like the American Southwest, precipitation

shortfalls not only decrease the hydrologic “income” but also decrease

evaporation. If there is no rain, there is nothing to evaporate. The

decrease in evaporative cooling raises temperatures (Roderick 2009, Yin 2014). Drier surfaces have a lower heat capacity so that

incoming energy that was once converted to latent heat of evaporation is now

felt as sensible heat that rapidly raises temperatures. Trenberth’s global

warming claims often have the tail wagging the dog by assuming higher

temperatures cause drier soils. Drier soils cause higher temperatures.

iii.) Natural cycles cause decadal oscillations between dry and wet years. Recent research (Johnstone 2014) reports the past 110 years of climate change in

northwestern North America can be fully accounted for by the multi-decadal

Pacific Decadal Oscillation (PDO).

The PDO alters the pattern of sea surface temperatures, which alters

atmospheric circulation affecting transportation of warmth from the south, and

moisture from the ocean. The PDO produces dry cycles that not only reduce

rainfall but can increase temperatures via mechanisms i and ii. The negative

PDO experienced over the last 15 years promoted more La Ninas that make

California drier.

iv.) The buffering effect of hydrologic reserves has increasingly dwindled. Wetlands

have been drained and degraded watersheds have drained subsurface waters

resulting in reduced evapotranspiration. The loss of California wetlands since

1820 has been dramatic (Figure 9) generating a decreasing trend in evaporative

cooling. Furthermore spreading urbanization has relegated natural streams to

underground pipelines. Urbanization has increased runoff (hydrologic

withdrawals) as rainfall is increasingly shunted into sewer systems and no

longer recharges whatever remaining landscapes are not paved over with heat

retaining materials. This increasing reduction in our moisture “reserves”

increases regional dryness and has not been balanced by irrigation.

Figure: California's Lost Wetlands Have Reduced Evaporative Cooling
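The bank-account analogy for drought stress can be sketched as a simple bucket budget. This is a minimal illustration with invented numbers in arbitrary units; `run_bucket` and its parameters are hypothetical names, and the sketch ignores real feedbacks such as evaporation falling as the soil dries.

```python
def run_bucket(storage, capacity, income, withdrawals):
    """Track water reserves step by step.

    storage:     starting reserve (lakes, wetlands, subsurface water)
    capacity:    maximum reserve the landscape can hold
    income:      rain and snow per time step
    withdrawals: evaporation and runoff per time step
    Returns the reserve after each step, clamped between 0 and capacity.
    """
    balances = []
    for gain, loss in zip(income, withdrawals):
        storage = min(capacity, max(0.0, storage + gain - loss))
        balances.append(storage)
    return balances


# Invented example: three normal months followed by nine dry months.
INCOME = [10.0] * 3 + [2.0] * 9
WITHDRAWALS = [8.0] * 12

LARGE_RESERVE = run_bucket(100.0, 100.0, INCOME, WITHDRAWALS)
SMALL_RESERVE = run_bucket(20.0, 20.0, INCOME, WITHDRAWALS)

print("large reserve:", LARGE_RESERVE)
print("small reserve:", SMALL_RESERVE)
```

With identical rainfall and losses, the smaller reserve runs dry while the larger one does not, which is the sense in which shrinking reserves raise hydrologic stress in the analogy.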

The difference

between “old school” science and Trenberth’s “new normal” is illustrated in

contrasting interpretations of recent extreme droughts and heat waves. For

example NOAA’s Randall Dole attributed the 2010 Russian heat wave to a lack of

precipitation and a high-pressure blocking pattern that enhanced surface

feedbacks. Dole had been studying the effects of blocking patterns for over 30

years since his research days at Harvard. Blocking high-pressure systems pump

warm air northward on the system’s western flank and trap that heat while clear

skies increase insolation. In 1982 Dole had mapped out 3 regions most prone to

blocking highs due to undulations of the jet stream. Those same blocking highs are also implicated in our more

recent heat waves that “Trenberth’s school of climate change” trumpets as

worsened by CO2. The 3 regions are 1) the Northeast Pacific, where the “Ridiculously Resilient Ridge” typically produces California’s drought, 2) the North Atlantic, which affects Western Europe’s droughts, and 3) northern Russia, generating heat

waves every 20 years such as the 2010 heat wave.

Dole concluded in 2011 “the intense 2010 Russian heat wave was mainly due

to natural internal atmospheric variability.” Dole’s historical analysis noted,

“The July surface

temperatures for the region impacted by the 2010 Russian heat wave show no significant warming trend over the prior

130-year period from 1880 to 2009” and he noted similar but slightly less

extreme heat waves had occurred periodically over the past 130-year period. The

more extreme temperatures could be attributed to “surface feedbacks” from the

early season drought and landscape changes. Based on a proper null hypothesis

Dole concluded, “For this region an

anthropogenic climate change signal has yet to emerge above the natural

background variability.”

Whether or not

Dole is correct, Dole is a climate scientist we can trust. A trustworthy

scientist, who cannot detect a difference between a recent extreme event and natural

extreme events from the past, will simply report that they cannot detect an

anthropogenic signal. Whether or not there was a CO2 global warming effect

remains to be tested. In contrast less trustworthy scientists will push a

non-falsifiable CO2 effect and argue natural variability “masked CO2 warming,”

a warming Trenberth insists we must assume to be present.

Trenberth also appears to hate any

scientific claim that weather was just weather. Accordingly he attacked Dole’s

“heresy” via his internet attack dogs. Joe Romm blogged, “Monster Crop-Destroying Russian Heat Wave To Be

Once-In-A-Decade Event By 2060s (Or Sooner)”, which provided Trenberth an opportunity to

denigrate Dole’s analysis in a way not allowed in more staid scientific journals.

Trenberth maligned Dole’s analysis as “superficial and does not come close to answering the question in an appropriate

manner. Many statements are not justified and are actually irresponsible. The question itself is ill posed because we never

expect to predict such a specific event under any circumstances, but with

climate change, the odds of certain kinds of events do change.”

Seriously? Dole’s research was

irresponsible because it found no CO2 effect?!? The great value of science to

society is that it provides us with some measure of predictability that guides

how we best adapt to future events. Dole simply asked, “Was There a Basis for

Anticipating the 2010 Russian Heat Wave?” and concluded that neither past weather patterns, current temperature trends, historical precipitation trends, nor increasing CO2 could have prepared Russia for that event. The only

predictability was that similar events had happened every 2 or 3 decades.

Trenberth has persistently argued the only “right question” to ask is “how much

has CO2 worsened an extreme event”, but Dole asked a more useful question. What

triggers extreme Russian droughts and heat waves every 20 to 30 years?

Dole’s models, forced with sea ice or ocean temperatures, did not

simulate the observed blocking patterns over Russia. Based on several modeling

experiments Dole concluded results were “consistent with the interpretation

that the Russian heat wave was primarily caused by internal atmospheric

dynamical processes rather than observed ocean or sea ice states or greenhouse

gas concentrations.” Yet despite Dole’s examination of a great breadth of contributing

factors, Trenberth attacked Dole for being “too narrowly focused” because, of

all things, Dole did not include July flooding in China and India, or record

breaking floods in Pakistan in August. Trenberth was suggesting that those

floods were due to warmer oceans and thus global warming should have been

blamed for worsening the Russian heat wave even though Dole’s modeling studies

found no such connection.

But Trenberth had the tail wagging

the dog - again! Due to the clockwise motion of a blocking High, warm air was

pulled poleward and accumulated on the western side of the system driving the

heat wave. In contrast the same system pushed colder air equatorward along the

system’s leading eastern edge. As discussed in Hong 2011, when that cold air was pumped southward, it

collided with warm moist air of the monsoons, and it was that cold air that increased

the condensation that promoted extreme precipitation in some locales. Nonetheless,

determined to connect CO2 warming to the Russian heat wave, it was Trenberth

who was not asking the right questions. He should have been asking how much did

a naturally occurring blocking pattern contribute to the southern Asian floods.

As was the case for the Russian heat

wave, analyses of the historic heat wave for Texas and the Great Plains revealed

no warming trend over the latter 20th and the 21st

century. In Hoerling 2013, a team composed of ten climate experts, mostly

from NOAA, examined the Texas drought and heat wave. They reported “no

systematic changes in the annual and warm season mean daily temperature

have been detected over the Great Plains and Texas over the 62-yr period from

1948 to 2009 consistent with the notion of a regional ‘warming hole’. Indeed, May–October maximum temperatures over the

region have decreased by 0.9°C.”

Thus those experts concluded the absence of observed warming since 1948 cautioned

against attributing the heat wave and drought to any warming, natural or CO2 related. (However, CMIP5 modeled results suggested a 0.6°C warming effect since 1900.) Likewise satellite data

revealed a radiative signature of a reduced greenhouse effect and increased

solar heating (Yin 2014).

In contrast Trenberth

claimed on Romm’s blog,

“Human climate change adds about a 1 percent to 2 percent effect every day in terms of more energy. So

after a month or two this mounts up and helps dry things out. At that point all

the heat goes into raising temperatures. So it mounts up to a point that once

again records get broken. The extent of the extremes would not have occurred

without human climate change.” But Trenberth’s 1% per day CO2 attribution seems

absurd in a region where maximum

temperatures had decreased. His warmer and wetter world meme only

obfuscated the issues and Trenberth was again asking the wrong question. The

correct question was how much had the drought lowered surface moisture and reduced evaporative cooling, thereby causing higher temperatures? In a region where there had been no increase in

maximum temperatures, the amplified temperatures for this extreme weather event

were likely the result of natural surface feedbacks caused by a lack of rain.

NOAA’s drought task force also reported on the subsequent Great Plains drought and heat waves. They concluded this

drought was likewise due to natural variability stating, “Climate simulations

and empirical analysis suggest that neither the effects of ocean surface

temperatures nor changes in greenhouse

gas concentrations produced a substantial summertime dry signal.” But no

matter the level of expertise, Trenberth via his internet attack dog Joe Romm

and his blog assailed the Drought Task Force with a less than honest

account. Trenberth assaulted their conclusions, “It fails completely to say

anything about the observed soil moisture conditions, snow cover, and snow pack

during the winter prior to the event in spite of the fact that snow pack was at

record low levels in the winter and spring.” (But Trenberth’s denigration contrasted with a document-search

for the term “soil moisture”, which found it was mentioned about 15 times

including the sub-section title in big bold letters “Simulations of Precipitation and Soil Moisture”.) Trenberth’s

mugging continued, “There is no discussion of evaporation, or potential

evapotranspiration, which is greatly enhanced by increased heat-trapping

greenhouse gases. In fact, given prevailing anticyclonic conditions, the

expectation is for drought that is exacerbated by global warming, greatly

increasing the heat waves and wild fire risk. The omission of any such

considerations is a MAJOR failure of this publication.”

But that was a very odd comment for

a top climate scientist! Anticyclonic conditions predict droughts will be

exacerbated by natural feedbacks, not by global warming.

And again Trenberth failed to ask

the right questions. If he believed a greenhouse effect exacerbated the drought

by increasing evaporation, then he needed to ask why satellite data has been

showing reduced downward long wave radiation and increased solar insolation

that typically occur in dry clear skies? In contrast to Trenberth’s

obfuscations, the Task Force had extensively discussed the meteorological

conditions that inhibited the transport of moisture from the Gulf of Mexico,

resulting in reduced soil moisture. Despite low snowpack, soil moisture had not

been deficient in the spring. It was the lack of moisture transported from the

Gulf that reduced summer soil moisture that raised temperatures and exacerbated

the drought. Furthermore modeling experiments performed by the Task Force found

precipitation was not affected by changes in sea surface temperatures or

greenhouse gases. And historical analyses (as seen in Figure 7) revealed that

despite global warming Central USA temperatures were lower than expected given

the extreme dryness and expected surface feedbacks. So again Trenberth failed

to ask the right questions. Why were temperatures higher during the droughts of

the 30s when there was no increased greenhouse effect?

The 2011-2015

drought in California is the most flagrant example of the Trenberth Effect.

California’s droughts are most often associated with natural La Nina conditions

and a blocking ridge of high pressure that inhibits the flow of moisture from

the Pacific to California. Another thorough analysis by NOAA’s Drought Task

Force again concluded, “the recent drought was dominated by natural variability.” In an interview with the NY Times, co-author Dr. Hoerling stated, “It is quite clear

that the scientific evidence does not support an argument that this current

California drought is appreciably, if at all, linked to human-induced climate

change.”

In support of the Drought Task

Force’s conclusions, every study of the California drought has reported the

major factor driving recent drought has been episodic rainfall deficits.

Nonetheless despite the extreme rainfall shortfall there was no evidence of any

trend in precipitation amounts or variability that could explain the recent

lack of precipitation. Ridging patterns have always reduced rainfall, and the

lack of a trend in precipitation contradicts recent claims that greenhouse

gases are increasing the likelihood of a ridging pattern that was blocking

precipitation (Swain 2014). Nonetheless media conduits for alarmism like

Slandering Sou promoted Swain’s arguments. But Slandering Sou is not a

scientist nor has she ever published any meaningful science. In contrast

climate scientists like Dr. Cliff Mass readily pointed out Swain’s faulty analyses.

Furthermore there is no long-term

precipitation trend as seen in the 700-year California Blue Oak study by Griffin 2015. The dashed blue line represents the extreme

precipitation anomaly of 2014. For the past 700 years similar extreme

precipitation shortfalls have equaled or exceeded 2014 several times every

century. From a historical perspective, we can infer there is no evidence that

rising CO2 has increased that ridging pattern that reduces rainfall and causes

drought. More severe and enduring droughts happened during the Little Ice Age when

temperatures were cooler. Clearly land managers and government agencies should

prepare for severe periodic droughts whether or not CO2 has any effect,

testifying to why the Oxford attendees saw little usefulness in CO2 attribution

studies.

Figure: No Trend in California Precipitation

As expected Trenberth’s attack dogs assailed

NOAA’s California report because it attributed drought to natural variability. Romm

blogged that the drought would Soon Be More Dire. Over at the Washington Post, Mooney’s fellow yellow

journalist Darryl Fears wrote “California’s

terrifying climate forecast: It could face droughts nearly every year.” But Fears’ projection has already failed. Despite no

precipitation trends, several authors blamed the California drought on

extremely high temperatures. Michael Mann argued “Don’t

Blame It on the Rain”. Blame it on global warming. To support

warming assertions Trenberth blogged a fanciful analogy, “The extra heat from the

increase in heat trapping gases in the atmosphere over six months is equivalent

to running a small

microwave oven at full power for about half an hour over every square foot of

the land under the drought.” If that wasn’t fearful enough Trenberth added, “No

wonder wild fires have increased!”

But historical analyses suggest the

universe had unplugged Trenberth’s “microwaves” over most of California since the 1940s, and wildfires were much worse during the Little Ice Age. As shown in the illustration below from Rapacciuolo

2014, observations show most of California, like Texas, had experienced a decline in the maximum temperatures

since 1940. If maximum temperatures have not risen there has been no

accumulation of heat and California appears to be insensitive to rising CO2.

The question that Trenberth failed to ask is why did maximum temperatures

decline in his “warmer and wetter” world?

Figure: 70-year Cooling Trend for Maximum Temperatures in Half of California

Mao 2012 analyzed the drought in California’s Sierra Nevada

and likewise found no trend in maximum temperatures. However assuming the

minimum temperature trend was an expression of anthropogenic warming, he used

the minimum trend to model CO2-warming effects on drought. But minimum

temperatures have little effect on drought. Relative humidity is highest and

approaches the dew point during the minimum. Due to daytime surface-heating,

turbulent convection peaks around the maximum temperatures and increases

evaporation and dries the soil dramatically. But turbulent convection is

virtually non-existent when minimum temperatures are measured. Accordingly

based on the minimum temperature trend, Mao 2012 found “warming may have slightly exacerbated some

extreme events (including the 2013–2014 drought and the 1976–1977 drought of

record), but the effect is modest; instead, these drought events are mainly the

result of variability in precipitation.”

That brings us to the most recent example of how

Trenberth’s “new normal” has undermined science. Williams

2015 claimed CO2 warming had worsened the California

drought by 8 to 27%, a claim that was trumpeted by press releases and blogs. To

his credit Williams did use a much better version of the Palmer Drought

Severity Index (PDSI) that takes into account the physical processes causing a

drought. He also pointed out that simpler versions of the PDSI, used by Diffenbaugh 2015, Griffin

2014 and others, had artificially amplified and

overestimated the contribution of temperatures to drought (Sheffield

2012, Roderick

2009).

However Williams claimed to have separated

anthropogenic warming from natural warming, and used the reversed null

hypothesis to do so. Williams warned that he had assumed any warming trend was

all anthropogenic. To determine natural temperature variability he simply

subtracted his hypothetical anthropogenic-warming trend from California’s

observed temperatures. Whatever remained was deemed natural variability. Assuming CO2 is responsible for any warming trend relieves climate scientists of the more arduous task of determining natural temperature

variability. Furthermore instead of separating out the confounding factors that

are known to contribute to higher temperatures, such as the PDO (Johnstone

2014) or landscape feedbacks (as discussed above), Williams simply acknowledged

he did not account for those factors as a caveat, then went on to promote his

human influence estimates in press releases suggesting he had scientifically

linked CO2 warming to drought severity. Without accounting for all factors,

Williams’s study was not a scientific evaluation, but simply an opinion piece.

Still, as might be expected, Trenberth weighed in, calling Williams’s analyses

reasonable but conservative, and recommended that he drop the lower end (8%) of

estimated human contribution.
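The logic of the residual method described above can be illustrated with a toy series. The sketch below is not Williams's code or data: it builds a synthetic record containing only the rising limb of an assumed 60-year oscillation, fits a straight line by ordinary least squares, and labels the remainder “natural”, as a residual approach would. All names and numbers are hypothetical.

```python
import math


def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for y = a + b * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def variance(values):
    """Population variance of a list of numbers."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


# Synthetic 30-year record: half of an assumed 60-year cycle, no imposed trend.
YEARS = list(range(30))
SERIES = [0.5 * math.sin(2.0 * math.pi * (year - 15) / 60.0) for year in YEARS]

# Residual method: fit a linear "trend", call whatever is left "natural".
SLOPE, INTERCEPT = fit_line(YEARS, SERIES)
RESIDUAL = [y - (INTERCEPT + SLOPE * x) for x, y in zip(YEARS, SERIES)]
TREND_SHARE = 1.0 - variance(RESIDUAL) / variance(SERIES)

print(f"fitted slope per year: {SLOPE:.4f}")
print(f"share of variance assigned to the trend: {TREND_SHARE:.2f}")
```

Here the fitted line absorbs most of the variance even though the series is an oscillation by construction, so the split between “trend” and “natural” reflects the assumption rather than the data. A real analysis would need independent evidence to tell the two apart.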

But Williams and Trenberth never asked the right

questions. How can scientists assume an anthropogenic warming trend if it

hijacks the earlier warming trend before 1950, a trend that the consensus

believes was all natural? How can scientists assume an anthropogenic warming

trend when there is no warming trend for maximum temperatures since 1950? How can

scientists blame global warming for worsening droughts when other factors like

the PDO, the drying of the California landscape and surface feedbacks were

never accounted for?

And more importantly, why should people ever

trust Trenberth’s “new normal” science that undermines the very foundation of

scientific inquiry? It is more than irksome that my taxes help pay Trenberth’s

high salary and allow him to undermine the foundations of scientific inquiry.

In part two: Trenberth’s snowjob, I examine

Trenberth’s fallacious argument that global warming causes more snow.